AMD’s Ryzen 9 9950X3D is pretty nippy, isn’t it? With 16 cores, this Zen 5 powerhouse is ideal for content creators and power users; however, if you’re gaming, does enabling SMT result in a performance loss? And come to think of it, what about that second CCD – is it hurting or helping your frame rates?

You see, I’ve recently been experimenting with both the Ryzen 9 9950X3D and the NZXT N9 X890E motherboard (as I’ll be reviewing the board soon), and as I was exploring the BIOS and software, my mind started to wander.

The Ryzen 9 9950X3D processor features two CCDs, each housing 8 CPU cores (8 x 2 = 16 CPU cores in total). Naturally, these cores are SMT enabled, so 16 cores become 32 threads to the operating system (or 16 physical cores and 32 logical cores, if you prefer). I couldn’t help but wonder how SMT and the two CCDs affect scaling in game workloads, because of course performance is often at the mercy of both the Windows scheduler and AMD’s own software.

If you’re familiar with the general design and features of AMD’s Ryzen 9000 lineup, feel free to skip this intro – but I want to ensure we’re all on the same page.

The Ryzen 9 9950X3D features two CCDs, each housing 8 physical cores, and with SMT that makes 16 cores / 32 threads in total. Each Zen 5-based CCD shares 32MB of L3 cache across its 8 cores, but in the case of X3D parts such as the 9800X3D, an additional 64MB of cache is bolted on (for a total of 96MB).

For our Ryzen 9 9950X3D, then, only CCD0 benefits from this additional L3 cache, with the second CCD being essentially ‘vanilla’. So, to be clear, that’s 96MB on CCD0 and 32MB on CCD1, meaning the whole processor features 128MB of L3 in total. As you may know, games are particularly sensitive to V-Cache (hence why the Ryzen 7 9800X3D is so performant in gaming), and therefore keeping game threads on this CCD on the Ryzen 9 9950X3D is often very important.
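If you like seeing the sums written down, here is a minimal Python sketch of the core, thread and cache arithmetic described above. The `CCD` class and `totals` function are names I've made up purely for illustration; the figures are the ones quoted in this article.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class CCD:
    """One core complex die. Illustrative model only, not an AMD API."""
    name: str
    cores: int
    base_l3_mb: int
    stacked_l3_mb: int = 0  # extra V-Cache, if any

    @property
    def l3_mb(self) -> int:
        return self.base_l3_mb + self.stacked_l3_mb


ccd0 = CCD("CCD0 (X3D)", cores=8, base_l3_mb=32, stacked_l3_mb=64)
ccd1 = CCD("CCD1 (vanilla)", cores=8, base_l3_mb=32)


def totals(ccds, smt=True):
    """Return (physical cores, logical threads, total L3 in MB)."""
    cores = sum(c.cores for c in ccds)
    threads = cores * (2 if smt else 1)  # SMT exposes two threads per core
    l3 = sum(c.l3_mb for c in ccds)
    return cores, threads, l3


print(totals([ccd0, ccd1]))        # full chip, SMT on
print(totals([ccd0], smt=False))   # X3D CCD only, SMT off
```

The full chip comes out at 16 cores, 32 threads and 128MB of L3, while the X3D CCD alone with SMT off is 8 cores, 8 threads and 96MB.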

There are also other potential penalties for threads scootching over to the second CCD (such as copy latencies for data), but there is also a bright side to this ‘vanilla’ second CCD for games. If a game is very CPU intensive, or other applications are running (say, an application updating in the background, or an encode), having those threads spill out onto the second CCD (instead of simply further pegging the 8 cores on the X3D CCD) is… well, only a good thing.

So then, this brings me to why I’m testing: back in the earlier days of Zen 1 and 2, developers were less familiar with the Ryzen architecture, and AMD’s own software and the scheduler were not as advanced, so SMT could hurt performance due to resources being shared or threads not being correctly managed.

And, given that in theory the second CCD could help in very CPU-heavy circumstances, but performance could potentially suffer if threads spill over to that CCD… well, my brain mulled all of this over when I started my testing for the NZXT N9 X890E motherboard, and I couldn’t help but think “well, you know, now I am curious”.

I will add a quick caveat – there are tools available to help control thread behavior, not least of which is Process Lasso, where you can associate specific applications to specific cores. I’ll likely deep-dive into this in a future article, but for my testing here, I wanted to see how AMD’s chip would handle things in various scenarios without being ‘hand-held’ (so to speak).
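For the curious, tools that tie an application to specific cores generally work with an affinity bitmask, where bit N represents logical processor N. Below is a rough Python sketch of how such a mask could be built for one CCD. It assumes logical processors are numbered contiguously per CCD, with CCD0 first (so 0 to 15 for CCD0 with SMT on); that numbering is an assumption on my part and worth checking on your own system, and both function names are hypothetical.

```python
def ccd_logical_cpus(ccd_index, cores_per_ccd=8, smt=True):
    """Logical CPU indices for one CCD.

    Assumes contiguous numbering per CCD, starting with CCD0.
    Verify this against your own system before relying on it.
    """
    per_ccd = cores_per_ccd * (2 if smt else 1)
    start = ccd_index * per_ccd
    return range(start, start + per_ccd)


def affinity_mask(logical_cpus):
    """Build a bitmask with one bit set per logical CPU index."""
    mask = 0
    for cpu in logical_cpus:
        mask |= 1 << cpu
    return mask


x3d_mask = affinity_mask(ccd_logical_cpus(0))
vanilla_mask = affinity_mask(ccd_logical_cpus(1))
print(hex(x3d_mask), hex(vanilla_mask))
```

On Windows, a hex mask like this is the sort of value the `start /affinity` command in cmd accepts, for example `start /affinity FFFF game.exe`, where `game.exe` is just a placeholder.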

In the early days of Ryzen processors (eg, Zen 1/2) I discovered disabling SMT could improve gaming performance, particularly in titles such as Rise of the Tomb Raider (even using DX12). But that was many years ago, and since then AMD have improved the processor architecture, reduced latencies across the chips, and worked with Microsoft to improve their own platform drivers, while Microsoft’s software is now better NUMA aware (basically, threads do better at remaining on the right CCD). And that’s to say nothing of developers’ own experience of AMD CPUs skyrocketing (not least due to their presence in the PS5/Xbox Series consoles).

Even so, on some games that didn’t really eat up a ton of threads on the CPU or relied on one or two ‘main threads’ to do the bulk of the lifting, would SMT disabled be a net win?

So how are we testing this?

Damn, that was a long intro – but now the stage is set, here’s how I wanted to stress test these points. I came to the conclusion that 6 different configurations for the Ryzen 9 9950X3D made sense:

- Both CCDs & SMT Enabled: 16 Cores / 32 Threads total split across the two CCDs.

- Both CCDs Enabled & SMT Disabled: 16 Cores / 16 Threads split across the two CCDs

- CCD0 (X3D) Enabled / CCD1 (non-X3D) Disabled & SMT Enabled: 8 Cores / 16 Threads across a Single CCD

- CCD0 (X3D) Enabled / CCD1 (non-X3D) Disabled & SMT Disabled: 8 Cores / 8 Threads across a Single CCD

- CCD0 (X3D) Disabled / CCD1 (non-X3D) Enabled & SMT Enabled: 8 Cores / 16 Threads across a Single CCD

- CCD0 (X3D) Disabled / CCD1 (non-X3D) Enabled & SMT Disabled: 8 Cores / 8 Threads across a Single CCD
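The six configurations above are simply every combination of three CCD modes and two SMT states. As a small sketch (the dictionary keys and `build_configs` name are my own labels, not anything from AMD’s software), the test matrix can be generated like so:

```python
from itertools import product

CORES_PER_CCD = 8

# Which CCDs are active in each mode (labels are illustrative).
CCD_MODES = {
    "both": ("CCD0 (X3D)", "CCD1 (non-X3D)"),
    "x3d_only": ("CCD0 (X3D)",),
    "vanilla_only": ("CCD1 (non-X3D)",),
}


def build_configs():
    """Return one dict per CCD mode / SMT state combination."""
    configs = []
    for (mode, ccds), smt in product(CCD_MODES.items(), (True, False)):
        cores = CORES_PER_CCD * len(ccds)
        threads = cores * (2 if smt else 1)
        configs.append(
            {"ccd_mode": mode, "smt": smt, "cores": cores, "threads": threads}
        )
    return configs


for cfg in build_configs():
    print(cfg)
```

Writing the matrix out this way is mostly a sanity check that no combination has been skipped before a long benchmarking session.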

Supporting the Ryzen 9 9950X3D then, I’m running an ASUS RTX 5090 Astral with stock settings, and Kingbank DDR5 memory at 7600MT/s. For the Ryzen 9 9950X3D, I left all frequency settings at default, but I did a little tweaking with Curve Optimizer Control, using an All Core Value setting of -28. As mentioned earlier, the motherboard is an NZXT N9 X890E.

I used a combination of AMD Ryzen Master / the motherboard BIOS to ensure that CCDs / SMT were disabled and behaving correctly, and… errr, oh yeah, Windows 11 for testing across the various games.

Given that the goal is to push as much of the workload onto the CPU as possible, I ran games at 1080P to stop the Astral RTX 5090 from being a bottleneck (Nvidia’s flagship is an absolute powerhouse, but higher resolutions start to shift the load onto the GPU a lot). I did, though, opt to use ray tracing on a few key titles (eg, Cyberpunk and Spider-Man 2) because it further hammers the AMD Ryzen 9 9950X3D.

If you’ve eagle eyes, you might spot I ran God of War Ragnarok at 4K with DLSS Performance, which equals an internally rendered resolution of 1080P. This is because, for my game anyway, I simply couldn’t get it to go into full-screen display at 1080P resolution.
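The 4K-to-1080P relationship is just the upscaler’s per-axis render scale applied to the output resolution. Here is a tiny sketch of that sum, assuming DLSS Performance mode renders at 50 percent of the output on each axis (the `internal_resolution` helper is a name I’ve made up):

```python
def internal_resolution(out_width, out_height, axis_scale):
    """Internal render resolution for a given per-axis scale factor."""
    return round(out_width * axis_scale), round(out_height * axis_scale)


# Assumption: DLSS Performance mode uses a 0.5 scale per axis.
PERFORMANCE_SCALE = 0.5

width, height = internal_resolution(3840, 2160, PERFORMANCE_SCALE)
pixel_ratio = (width * height) / (3840 * 2160)
print(width, height, pixel_ratio)
```

Halving each axis means only a quarter of the output pixels are actually rendered, which is why the GPU load ends up comparable to native 1080P (plus the cost of the upscale itself).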

DLSS (or any other upscaling) wasn’t enabled for games other than God of War, and it goes without saying Frame Generation was also turned off, and given it’s a test system, other applications were kept barebones (eg, no Discord popping up).

Starting things out with good ol’ Counter-Strike 2, I ran with all settings at their highest and opted to stick to 1440P for the resolution. The main standout here is the difference between Vanilla and any variant of the X3D cores. For example, the 1 percent low frame rates for Ancient are around 150 with SMT on for the Vanilla CCD, but the same test with SMT enabled on all 16 cores of the Ryzen 9 9950X3D nudged performance to 240.

The performance with 2 CCDs enabled versus just the X3D CCD was pretty close to margin of error stuff, and with all the cores enabled, SMT on versus off also made little difference for Zen 5. The Vanilla CCD did show a small improvement with SMT off vs on.

Cyberpunk 2077 is next, with maxed settings, albeit I plonked all Ray Tracing settings to just medium, using a native resolution of 1080P with no frame generation or upscaling of any kind. Here, our Ryzen 9 9950X3D had similar results for the single X3D CCD and both CCDs. For the benchmark scenario, I was driving around a section of Night City, and while the starting location isn’t too dense, this rapidly changes. With this, CPU usage shoots up. SMT off does make quite a difference here, dropping 17FPS when only the single V-Cache CCD is available; 8 threads simply isn’t enough, and performance suffers.

Again, the solo ‘vanilla’ CCD, with SMT either on or off, was significantly worse than the X3D only or both CCDs, with SMT off barely clawing over 60fps in the 1 percent lows.
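Since 1 percent lows come up constantly in these results, here is a hedged sketch of one common way to derive them from a list of frame times. Capture tools differ in their exact definitions, so treat this as an illustration rather than the precise method behind my charts; the function name and the sample data are made up.

```python
def fps_summary(frame_times_ms):
    """Return (average FPS, 1 percent low FPS) from frame times in ms.

    The 1 percent low here is the average FPS across the slowest
    1 percent of frames. Other tools may use a percentile instead.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")
    count = len(frame_times_ms)
    average_fps = 1000 * count / sum(frame_times_ms)

    slowest_first = sorted(frame_times_ms, reverse=True)
    worst_count = max(1, count // 100)  # at least one frame
    worst = slowest_first[:worst_count]
    one_percent_low = 1000 * worst_count / sum(worst)
    return average_fps, one_percent_low


# Made-up capture: 99 smooth frames at 10 ms plus one 20 ms hitch.
sample = [10.0] * 99 + [20.0]
average, low = fps_summary(sample)
print(round(average, 1), round(low, 1))
```

In that made-up capture a single hitch barely moves the average but halves the 1 percent low, which is exactly why the lows can separate configurations that look identical on averages.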

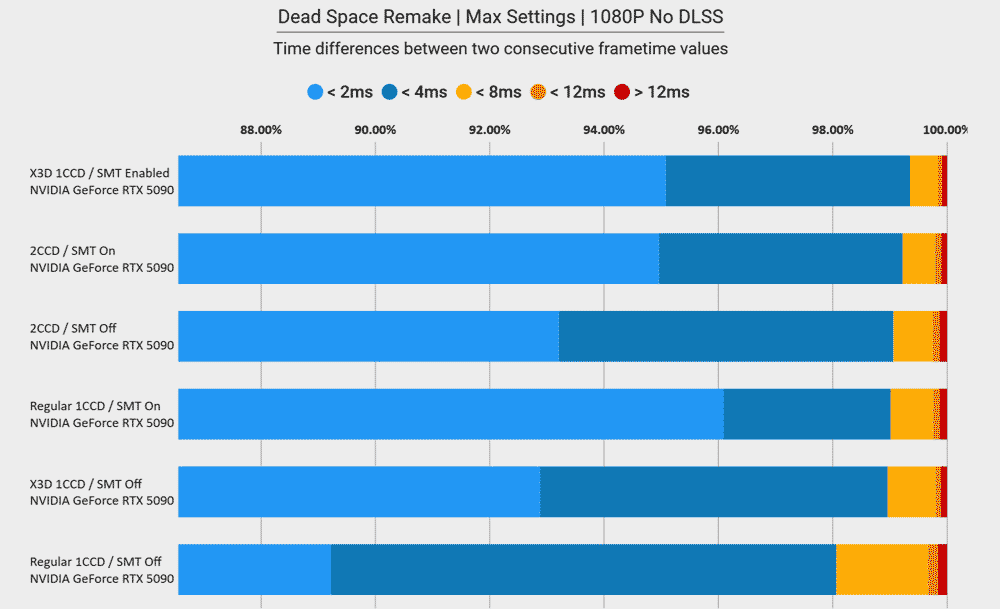

Dead Space Remake is a game I wish performed better in the marketplace so we got a remake of the second game, or a new entry into the series – but my frustrations of lack of a remake of the fantastic sequel aside, performance for Dead Space 1 Remake is solid. The game isn’t immensely demanding on CPU or GPU for that matter, but once again, SMT enabled was a slight win. Both CCDs versus X3D CCD came out to margin of error values really.
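When I say ‘margin of error’, I mean the gap between two configurations is no bigger than the run-to-run variation. A rough way to express that in code is sketched below; it’s a simple heuristic rather than a formal statistical test, the threshold `k` is an arbitrary choice, and the FPS figures are invented for the example.

```python
from statistics import mean, stdev


def within_noise(runs_a, runs_b, k=2.0):
    """True if the gap between means is small versus run-to-run spread.

    Heuristic only: compares the difference in average FPS against
    k times the combined sample standard deviation of both sets.
    Each set needs at least two runs.
    """
    gap = abs(mean(runs_a) - mean(runs_b))
    spread = (stdev(runs_a) ** 2 + stdev(runs_b) ** 2) ** 0.5
    return gap <= k * spread


# Invented average-FPS figures from three runs per configuration.
both_ccds = [200.0, 202.0, 198.0]
x3d_only = [201.0, 203.0, 199.0]
vanilla_only = [150.0, 151.0, 149.0]

print(within_noise(both_ccds, x3d_only))      # gap of 1 FPS
print(within_noise(both_ccds, vanilla_only))  # gap of 50 FPS
```

With these invented numbers, the first pair is indistinguishable from noise while the second clearly isn’t, which mirrors the general pattern of X3D only vs both CCDs compared with the vanilla CCD.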

DOOM The Dark Ages is a beautiful game, and here I ran the title with everything set to Ultra Nightmare, with path tracing disabled and sticking again to 1080P. I used the built-in benchmark for easy repeatability and chose the ‘Siege’ level. Indeed, the opening few levels of Doom The Dark Ages are tighter environments, but this all changes in The Siege, with large and open environments, lots of enemies on screen and huge amounts of effects, geometry, and… well, I think you get the point.

Curiously, X3D SMT came in at number one, with SMT off for the same configuration dropping to fourth, but second and third were 2x CCD SMT off and SMT on, respectively. It was all pretty close though, with 2CCD SMT on and off separated by just a couple of frames, and SMT off and on for the X3D-enabled CCD the largest gap between these top four results, about ten FPS between averages.

The ‘Vanilla only’ CCD brought up the rear; curiously, SMT off here performed a few frames per second better. I did run these results a couple of times and achieved similar numbers, so I don’t know if it’s something hinky with my setup, or whether in this case the additional logical threads (SMT) are gobbling up lots of L3 cache, so while more threads would help to spread work from the engine, it’s negatively offset by having less ‘space’ in L3 cache (again, 32MB vs the 96MB of the V-Cache variant, which performed better with SMT on).

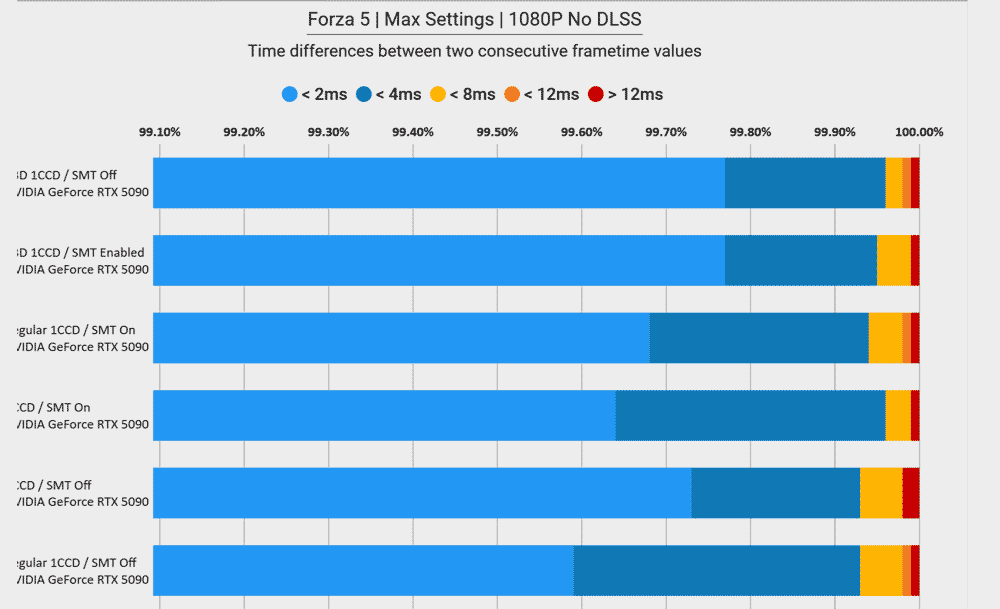

Forza 5 – with default max settings (yes, that includes RT), running at 1080P and of course, using the title’s built-in benchmark for easy repeatability. The top 3 spots fall within the margin of error for average frame rates, though a slightly larger gap emerges for the 1 percent lows. Much like Doom The Dark Ages, the vanilla CCD performs better with SMT off instead of on; and again, just like Doom, the X3D only test did better with SMT on versus off.

God of War Ragnarok is set to max settings with a manual run of a fairly repeatable section not too long into the campaign. Unfortunately GOW:R wouldn’t work for me at 1080P; I would get scaling issues no matter the ‘screen mode’ I chose, so I ran with DLSS in performance mode with a 4k output. This allows the game to internally render at 1080P, keeping the GPU’s bottleneck out of the equation. Naturally, no DLSS / FSR frame generation is used.

SMT off for all configurations of CCDs (X3D only, Vanilla only, or both enabled) performed worse; so yet again, keeping SMT enabled was a net benefit to the game’s performance. For the X3D only or two CCDs – averages show essentially the same result, but the 1 percent did favor just the X3D only configuration.

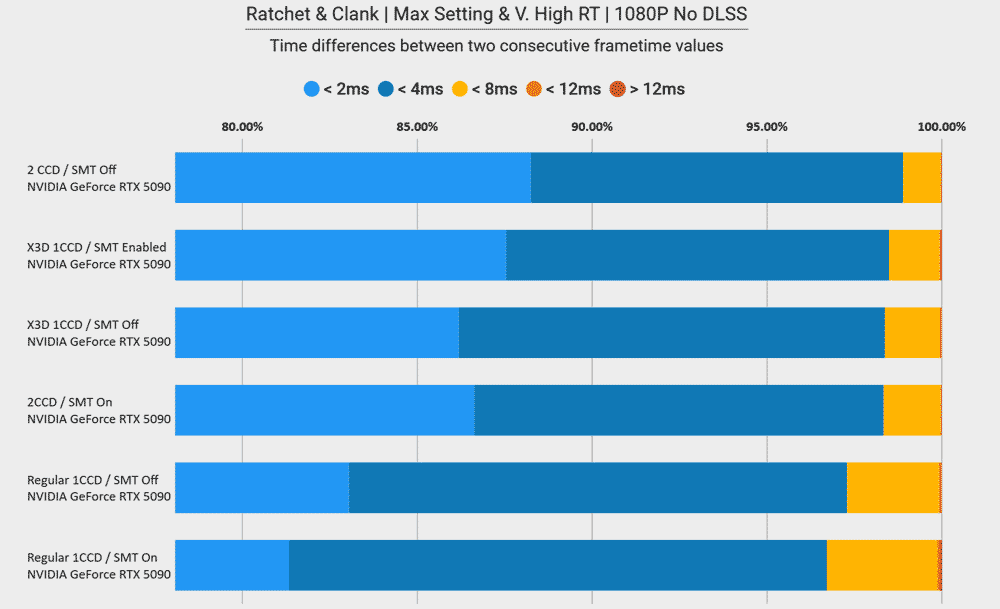

Another Sony title, Ratchet & Clank Rift Apart, was released early in the PS5’s life cycle and, fortunately, benefited from a fantastic PC port. I am running the game with the graphics and RT max preset for ease. As always (well, almost always), 1080P is selected for the resolution, and we ensure any form of frame generation is disabled. SMT-enabled wins here across the board, with the 16 cores / 32 thread configuration of the Ryzen 9 9950X3D proving to be the victor.

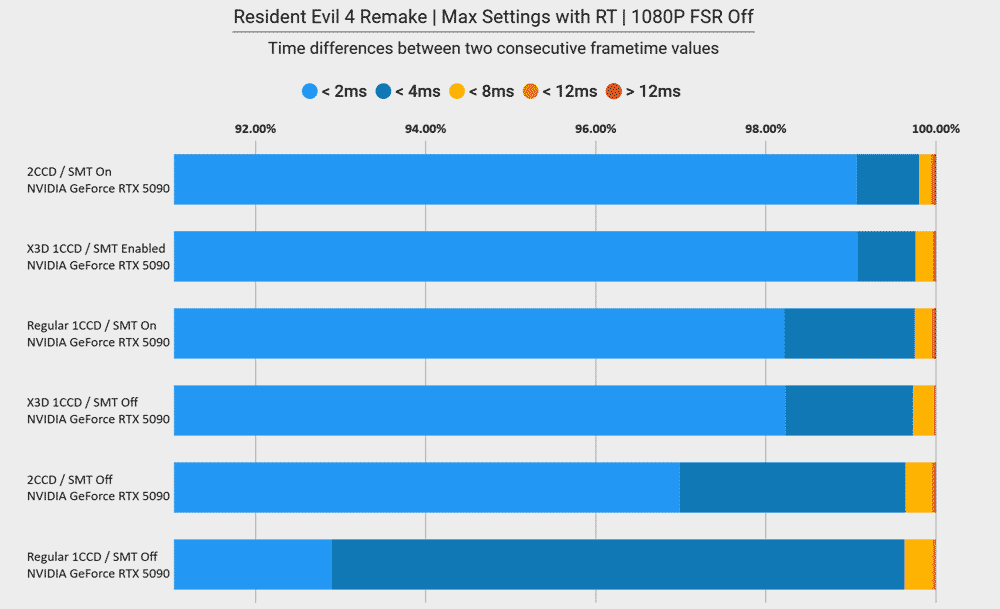

Resident Evil 4 Remake is a great game, and the RE engine does a great job of running it too. I ran the game at max settings, sticking to 1080P with ray tracing enabled and max texture size. Once again, SMT off performed better when only the ‘regular’ CCD was enabled, and the same could be said for the X3D only configuration too.

Also, I’ll confess the non-VCache performance being so much lower for Resident Evil 4 remake had me raise an eyebrow; so much so, I reran the test a couple of times but with the same results (roughly speaking).

Spider-Man 2 is the last of the Sony games I’ve tested here, and like Ratchet and Clank it is a visually fantastic PC port, though when all settings are maxed out (particularly ray reconstruction) it can be very demanding. It also suffered a little more than Ratchet and Clank at launch, with users reporting stuttering and other problems. Patches have somewhat helped, but it certainly was a big miss from Sony.

Regardless, we’ll test here by setting RT to high – a few settings below max and forgoing RT reconstruction too. The regular rasterized graphics settings (eg textures) are all set to their highest though. And the resolution? You guessed it, 1080P and any and all frame generation disabled.

Two CCDs come out on top; albeit with SMT disabled. Enabling it costs about ten FPS on average, and around 14 FPS is shaved off by enabling SMT when only the X3D CCD is active. So yeah, this is one game SMT off seems to win across the board, including the ‘vanilla’ CCD only (albeit by the smallest margin).

Finally – Stalker 2; again 1080P with max settings, with a manual run at the start of the game where you first enter the sewers and meet the first anomaly. The Ryzen 9 9950X3D shows again that, in terms of raw performance, AMD’s decision to embrace cache continues to pay off. SMT on vs off typically favors SMT enabled, slightly, but frankly, it’s completely margin of error stuff.

But what about older games?

Games dating back 10 years (especially single player titles) will perform well on just about any modern CPU, but I couldn’t help myself: I had to know how a few games would perform on Zen 5; what the games would think of SMT on a Ryzen 9000 series CPU, and I also had a lot of questions regarding the benefits of X3D too.

Like I said in the opening paragraphs of this very article, Ryzen 1000 and 3000 series processors both performed decently better in games with SMT disabled (due to developers being unfamiliar with the architecture of Zen, and again, that pesky Windows scheduler). Rise of the Tomb Raider was one of the worst culprits for this, and the (then new) and fancy DirectX 12 didn’t do much to help either. So, I couldn’t leave testing here; I had to go back to the older games.

Of course, this isn’t a direct apples-to-apples comparison: the software (Witcher 3 especially) has benefited from a ton of software updates since its debut; and that’s to say nothing of once again, Windows and AMD’s own efforts to improve scheduling.

Regardless, the results here are pretty interesting.

For Rise of the Tomb Raider’s performance data, I used my old favorite benchmark spot (and arguably, one of the best areas of the game to explore, as it still looks gorgeous even now, Geothermal Valley) in a manual run between two campsites. I tested, of course, at 1080P, with the very high preset. For your consideration: there are actually more demanding settings for a few options if you manually select them one-by-one; but for consistency (and frankly ease of repeatability), I stuck with the very high preset.

Secondly, I used DirectX 12; this title was at the dawn of DX12 support, when many titles could choose to stay with the legacy DX11 API or ‘experiment’ with the newer and shinier option. What’s pretty funny is that selecting both the Very High settings and DX12 brings up their own warning dialogues; the Very High settings suggest a minimum of 4GB (so I think the RTX 5090 should be okay), and selecting DX12 informs me it can perform worse in some setups. Who knows – maybe I’ll do DX11 vs 12 testing in the future, but for now, the results here are consistent with what we’ve seen from newer games.

SMT remains a bonus in performance, and even with two CCDs this is the case. Quite simply, the OS managing to ‘pin’ threads to the X3D CCD rather than letting them ‘spill over’ (generally speaking) provides a nice performance bump.

And for Witcher 3 – SMT on for the single X3D CCD ‘wins’, though the difference in average FPS for the top 2 – 3 results is close to the margin of error (perhaps even 4 results). The 1 percent is a little clearer, however. The obvious loser here is the ‘regular’ CCD, without the benefit of vCache; performance is significantly worse, around 40FPS separating it and the X3D result at the top.

For Witcher 3, I did a manual run (or should I say horse ride) near the beginning of the game into and then out of a town. Ray Tracing preset was chosen, but not the highest one as it starts to hit the Nvidia RTX 5090 pretty hard, even at just 1080P, and while it wasn’t quite maxing out GPU usage, it was enough where I was concerned it could be a limiting factor for a second here or there.

So, what conclusions can we draw: SMT on vs Off & Disable a CCD?

Well, I think the real takeaway here is that ultimately, even if you don’t tweak anything with AMD’s Ryzen 9 9950X3D (and by anything, I mean curve optimizer, tweaking any settings other than memory speeds), the chip performs absolutely great.

But, for our results, we have to start somewhere, and I suppose the CCD results are as good a place as any. AMD’s V-Cache technology really is an impressive benefit to performance, and CCD0 by itself or both CCDs enabled stomps the CCD1 (non V-Cache) chip over and over again, as you would expect.

AMD’s Ryzen 9 9950X3D does a pretty good job of keeping threads of games and other demanding software pinned to CCD0 (the V-Cache chip) with everything set to default, and while users can mess around with thread affinity (or use apps such as Process Lasso, or do what I did, and disable SMT or CCDs), really it seems… kinda pointless for most users.

Drilling deeper: yes – a few games do benefit from the second CCD disabled, but many don’t, and in the games and testing I did, even games which did perform better, such as Counter-Strike, the difference is pretty modest.

Also: in the real world, most users don’t just have Windows 11, performance capture software, Steam and whichever game they’re playing running. Instead, in the real world, you might well have Discord, web browsers, Excel (shudder) or a dozen other applications open, each vying for some measure of CPU resources.

The story is similar for SMT; in most games, SMT is a net benefit, and even in a game such as Spider-Man 2, the loss in performance with SMT enabled is noticeable, but in the grand scheme of things not that big. Not least because this is 1080P testing on a 5090; if you’re more GPU limited things would change, and a lot too.

I would encourage the hardcore among you, of course, to tweak and test things out, as this isn’t a full and comprehensive list of all games, and I’m sure there are some I’m missing where the results could show a bigger impact (if so, feel free to reach out and contact me!). And besides, a reboot is all that it takes to flip a setting back, after all. But I’m left with the conclusion that if I were making a blanket statement to the vast majority of users who just want to play a game… don’t worry about it.

That’s to say nothing of the pain in the ass factor: Let’s assume you do more than game and want to use your system for Photoshop or programming and development, or just about anything else – well, SMT (or that other CCD) is of course a huge positive for performance, and yes, you could reboot to re-enable it, but that also means having to close work or web browsers or games etc. Basically, what I’m saying is: it’s a fun experiment, but I can’t imagine this being appealing to the vast majority of users who simply want to game and then get back to real-life work.

It’s certainly been interesting to see how AMD’s SMT and CCDs scale for gaming workloads; and hopefully you agree!