Resolution & Frame Rate How They Work & Analysis On Impact in Games |Tech Tribunal

CrimsonRayne, 11th June 2014

Since the specs of the Playstation 4 and Xbox One leaked online, both gamers and media have focused heavily on the resolution a game runs at, along with its frame rate. Much of the Xbox One vs Playstation 4 debate revolves around whether a game runs at 60FPS or ‘just’ 30FPS, and the resolution the title is rendered in. With 1080P TVs now normal in most homes, and players expecting massive improvements over the gameplay and graphics of the previous generation, we thought it’d be a good time to go through exactly how frame rate and resolution work and what impact they have on your gaming life. While many gamers do understand the concepts, we’ll be going into the depths of the debate, and for the equal number of you who just know that a ‘bigger number is better’ we’ll explain everything in detail. And of course we’ll answer the major question – how much of a difference does it really make to your gaming experience?

There are things we’ve got to understand before we begin. The first is that neither the Playstation 4 nor the Xbox One has been out that long. At the time of writing, both systems are barely half a year old, and there’s a lot more life left to be squeezed out of their GPUs, CPUs and memory. This can be achieved in a multitude of ways. A few examples would be better SDKs (Software Development Kits, which developers use to create games on the respective hardware), improved APIs (Application Programming Interfaces – basically how the game application ‘talks’ to the hardware) and various system level improvements, for example a reduction in OS overhead, improved drivers and other bits and bobs.

Then there are other factors which neither Sony nor Microsoft can control or directly influence, and which fall directly on the shoulders of the game’s development studio. Here Sony or Microsoft can only guide the studio and help them understand the hardware better. These factors include better experience with the hardware, improvements to engines (remember, engines such as the ever popular Unreal Engine are constantly being improved) and a better understanding of multi-threading. While improving how efficiently code runs won’t make the specs ‘higher’ (a 1.84TFLOP GPU isn’t going to magically grow to 2.4TFLOPS), you’re making much better use of the performance that is there, rather than wasting it.

What Is Frame Rate and How Does It Work?

Frame Rate (commonly known as FPS = Frames Per Second) in its simplest form is the speed at which individual images (frames) are shown on the screen, and is usually measured per second. So in the case of 30FPS, thirty unique frames should be shown on screen each and every second for the frame rate to be constant. Frame rates vary greatly depending upon both the medium and the application within it. For instance, the frame rate of most films is only 24 frames per second, while most TV shows run at 30FPS or more; on the other hand, some movies such as The Hobbit were shown at 48FPS. In most instances, a video game developer will want to hit at least 30FPS consistently as you play the game. It’s extremely important to remember that frame rate is a very different thing from refresh rate. Refresh rate is the speed at which the screen itself updates, and most traditional flat screens refresh at up to 60HZ (so 60 times per second). Some PC screens can now manage 120HZ or even higher. The device hooked up to the screen, however, might only be putting out 20 unique frames per second – in other words, far fewer than the screen is able to show.

The faster the frame rate being targeted, the less time a GPU (and the system the game is running on) has to ‘render’ (draw) each individual frame of animation. The more complex a scene, the slower the console will render each frame of animation as it has to take longer to effectively ‘draw’ the frame. The basics of this work fairly simply. For a game to achieve 60 FPS the title needs to maintain an average of a new frame being rendered every 16.66ms (milliseconds). You can easily do this math yourself – take 1000 (the number of ms in a second) and then simply divide by the frame rate – in this case, 60. Similarly if we want to find out the average draw time of 30FPS we could go ahead and do the math of 1000 / 30 which would produce the result of 33.33ms per frame of animation.
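
The division described above can be written down as a tiny helper. This is only a sketch of the arithmetic in the paragraph; the function name `frame_budget_ms` is made up for illustration.

```python
def frame_budget_ms(fps: float) -> float:
    """Average time available to render one frame, in milliseconds."""
    if fps <= 0:
        raise ValueError("fps must be positive")
    # 1000 ms in a second, shared equally between the frames drawn in that second.
    return 1000.0 / fps


for target in (30, 60, 120):
    print(f"{target} FPS -> {frame_budget_ms(target):.2f} ms per frame")
```

Doubling the target frame rate halves the time the system has to draw each frame, which is exactly the trade off discussed in the rest of this section.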

I decided to reach out to Robert Hallock, who serves as Technical Communications, Desktop Gaming & Graphics over at AMD: “It is strictly my personal opinion that 30 FPS is the minimum acceptable framerate that I would consider playable, but I prefer 45-60 FPS in my own gaming.”

Increased frame rates help to provide a ‘smoother’ looking experience, and also reduce the ‘lag’ associated with controls (we’ll get to that), but they also mean the GPU has significantly less time to produce pretty looking graphics. It’s a trade off a developer needs to make for consoles. While PC gamers can lower graphics settings or resolution, overclock their hardware or replace a below par GPU, console devs are ultimately the ones responsible for ensuring that the game runs and looks the best that it can on the system.

It’s also worth remembering that different areas of the game, different lighting conditions, different levels of action on screen and so on can massively impact the frame rate of a title. For example, if you’re playing a game and just looking at a fairly uninteresting gray wall, the system you’re playing on doesn’t exactly have its work ‘cut out’. On the other hand, if you step out of the building and start firing your RPG at the various enemies, causing huge explosions, the system’s workload increases massively.

On the subject of input latency, Robert Hallock says: “Input latency is the time it takes for a user’s input to be rendered on-screen. Input latency has several factors: how much v-sync buffering is being done? More buffering eliminates juddering and tearing, but also increases input latency by a few frames. How fast is the monitor’s refresh rate? Higher refreshes will display mouse movement more promptly. How fast is the mouse’s polling rate? Higher polling rates will enable more accurate movement and more promptly convey movement to the system. I’m not a gamer that’s especially sensitive to input latency, unless it’s particularly egregious, nor do I play games that require precise frame timing (e.g. Street Fighter).”

Much of this also comes down to V-Sync, which PC gamers have known about for a long time. V-Sync (Vertical Sync) ties the delivery of new frames to the monitor’s refresh rate. With V-Sync disabled, the game ignores the monitor’s refresh rate and throws out frames as fast as possible, which can lead to screen tearing as parts of two different frames are shown on screen at once. Enabling V-Sync at first glance looks a hell of a lot prettier, but there’s a dark side to this – if the frame rate isn’t at the refresh cap (so for instance, on PC you’ve got V-Sync enabled on a 60hz monitor, and your GPU only manages to spit out 43 frames per second in one area) you can be on the wrong end of input latency or juddering.

So you might now be asking why 30FPS doesn’t have the problems associated with not hitting 60FPS – in other words drastically higher input latency or random juddering. Well, that’s because the refresh rate of the monitor divides evenly by the frame rate. In other words, 30FPS is exactly half of 60hz, so every frame is shown for exactly two refreshes, twice the length of time on screen compared to a 60FPS title. We see a frame on screen for the 33.33 ms explained above instead of 16.66 ms. This rule also transfers to 120hz monitors, where 30FPS means each frame is held for exactly four refreshes.
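
To see why 43FPS judders on a 60hz screen while 30FPS doesn’t, here is a rough sketch of V-Sync frame pacing. It rests on simplifying assumptions that are ours, not a description of any real driver: the GPU finishes frames at a perfectly steady rate, never stalls waiting for the display, and each finished frame appears at the first refresh after it is ready. The function name `refreshes_per_frame` is hypothetical.

```python
def refreshes_per_frame(fps: int, refresh_hz: int, frames: int) -> list:
    """How many screen refreshes each of the first `frames` frames stays visible."""
    if not 0 < fps <= refresh_hz:
        raise ValueError("this sketch assumes 0 < fps <= refresh_hz")
    # Frame k is ready at k / fps seconds and appears at the first refresh at or
    # after that moment, i.e. at refresh number ceil(k * refresh_hz / fps).
    # -((-a) // b) is integer ceiling division, which avoids float rounding.
    shown_at = [-((-k * refresh_hz) // fps) for k in range(frames + 1)]
    # A frame stays on screen until the next frame replaces it.
    return [later - earlier for earlier, later in zip(shown_at, shown_at[1:])]


for fps in (60, 43, 30):
    print(f"{fps} FPS on 60hz: {refreshes_per_frame(fps, 60, 10)}")
```

At 60FPS every frame is held for one refresh and at 30FPS every frame is held for two, so motion is even. At 43FPS the list is a mix of ones and twos, meaning some frames linger twice as long as others, and that uneven pacing is what you perceive as judder.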

For PC gaming, companies are pushing technologies to provide the benefits of both V-Sync on and off. These technologies (so far) are FreeSync (AMD), G-Sync (Nvidia) and VESA’s Adaptive-Sync. Though each of these technologies works a little differently, the idea behind them is pretty similar: to remove or greatly reduce stuttering and visual tearing by syncing the monitor’s refresh with the creation of a new frame from the GPU. Effectively this means that the monitor only updates when there’s a new frame to display from the GPU, meaning a more consistent experience. Obviously there are some limits imposed – for example, it can’t refresh faster than the screen permits, and it also doesn’t refresh slower than 30hz, otherwise you’d start to perceive flickering.

What is Resolution and How Does It Work?

Resolution is quite simply the ‘size’ of the image, and is measured in width and height. It’s important to note there are two types of resolution: the native resolution (sometimes referred to as the internal rendered resolution) and the output resolution. These two figures can vary greatly, but we’ll discuss their meaning in just a moment. Video games today are primarily created for the 16:9 widescreen format (since those are the most popular type of TVs and monitors now), and this means that an image that has the resolution of 1080P is 1920 (width) x 1080 (height).

The ratio of width to height in 1920×1080 is the same for basically any 16:9 image – for example, when a game is rendered at 720P, it’ll be running at 1280×720. You can use the following math to figure out the width from the height. Let’s assume a developer says that a game is rendered at 900P. Given our knowledge we can take 900 and multiply it by 1.78 (technically it’s 16 / 9 = 1.777… – but 1.78 is ‘close enough’ to give you a basic approximation) and that’ll give you the width. In this case it would mean that a 900P image is 1600 x 900. While a few games aren’t rendered in 16:9 (a few indie titles are still 4:3 and so will have resolutions like 1024×768) they’re the exception now rather than the rule.
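
The same calculation can be done exactly by using the 16:9 fraction instead of the rounded 1.78. A minimal sketch, with `width_for_height` being a name chosen purely for illustration:

```python
from fractions import Fraction


def width_for_height(height: int, aspect: Fraction = Fraction(16, 9)) -> int:
    """Width in pixels of an image with the given height and aspect ratio."""
    # Using an exact fraction avoids the small error that 1.78 introduces.
    return round(height * aspect)


for height in (720, 792, 900, 1080):
    print(f"{height}P -> {width_for_height(height)} x {height}")
```

Passing `Fraction(4, 3)` as the second argument gives the width for a 4:3 title, so a height of 768 comes out at 1024.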

Unlike old screens, modern TVs (much like PC monitors have for… well, a long time) handle everything in Progressive Scan (which is what the P stands for in, say, 1080P). This is a bit different from the old “I” method (I standing for interlaced). The difference for gamers and consumers is fairly simple – P is better than I. P means that the image is refreshed from top to bottom all in a single cycle (a single hz) while interlaced does it in a kind of two pass manner: it first refreshes the ‘odd’ lines, then the even lines. There was some debate back when displays could only handle up to 1080I about whether it was better to select 720P or 1080I, and the general rule back then was that P was better for games and fast moving media, while interlaced suited slower moving images a little better. Now it’s not an issue, as almost all TVs and monitors can handle 1080P without any issues.

There are other factors which come into this, including pixel density – another quote from Robert Hallock over at AMD states “In simplest terms, a monitor’s resolution is a figure that expresses the number of horizontal pixels and the number of vertical pixels. For example, 1920×1080 means there are 1920 pixels horizontally, and 1080 pixels vertically. Pixel density refers to how tightly those pixels are packed together within the physical size of the LCD. You can increase pixel density in two ways: shrink the physical size of the LCD, or increase the number of pixels in the LCD. (You could also do both, but I’m not sure why you’d want to.) High pixel densities impart more clarity and sharpness in the display, which is why a 4K/30” monitor—even at 30”—is the highest PPI of any desktop display I can think of at ~146 PPI.”
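
Pixel density is easy to work out yourself: divide the length of the screen’s diagonal in pixels by its diagonal in inches. The sketch below assumes square pixels, and the 24” 1080P monitor is just our own comparison point rather than anything from the quote.

```python
import math


def pixels_per_inch(width_px: int, height_px: int, diagonal_in: float) -> float:
    """Pixel density of a display, assuming square pixels."""
    # Pythagoras gives the diagonal length measured in pixels.
    diagonal_px = math.hypot(width_px, height_px)
    return diagonal_px / diagonal_in


print(f'4K at 30": {pixels_per_inch(3840, 2160, 30):.1f} PPI')
print(f'1080P at 24": {pixels_per_inch(1920, 1080, 24):.1f} PPI')
```

The first line lands within a point of the ~146 PPI figure quoted above, while the same calculation for a 24” 1080P screen comes out at a little under 92 PPI.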

1080P vs 720P – the difference might not ‘sound’ that big (you might be tempted to simply take 1080 minus 720, be left with 360 pixels and then say ‘oh, that’s all the difference is’) but you’d be wrong. In fact, you’d need to take 1920 and multiply it by 1080, giving you a figure of 2,073,600 pixels. If we take 1280 and multiply that by 720 we come up with a figure of ‘only’ 921,600 pixels – a rather large difference. If we then take the 2,073,600 pixels and divide by the 921,600 we come up with a figure of 2.25. This means that 1080P has 2.25 times as many pixels as 720P – quite the number.
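
If you’d like to run the same comparison for other resolutions, a short sketch along these lines will do it. The list of resolutions is simply a selection of common 16:9 sizes.

```python
def total_pixels(width: int, height: int) -> int:
    """Number of pixels in a single frame at the given resolution."""
    return width * height


resolutions = [(1280, 720), (1408, 792), (1600, 900),
               (1920, 1080), (2560, 1440), (3840, 2160)]

previous = None
for width, height in resolutions:
    count = total_pixels(width, height)
    # Compare each entry against the one before it in the list.
    note = "" if previous is None else f"({count / previous:.2f}x the previous entry)"
    print(f"{width} x {height}: {count:,} pixels {note}")
    previous = count
```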

For ease of reference we’ve provided a table showing the difference in pixels between various resolutions. This isn’t a ‘complete’ list but should give you an overview of the common resolutions so you can understand how ‘large’ each image is, and its difference in size compared to the previous resolution on the list.

| Resolution | Total pixel count | Size difference vs the previous resolution |
| --- | --- | --- |
| 1280 x 720 | 921,600 pixels | |
| 1408 x 792 | 1,115,136 pixels | 1.21x more pixels than 720P |
| 1600 x 900 | 1,440,000 pixels | 1.29x more pixels than 792P |
| 1920 x 1080 | 2,073,600 pixels | 1.44x more pixels than 900P |
| 2560 x 1440 | 3,686,400 pixels | 1.78x more pixels than 1080P |
| 3840 x 2160 | 8,294,400 pixels | 2.25x more pixels than 1440P; 4x more pixels than 1080P! |

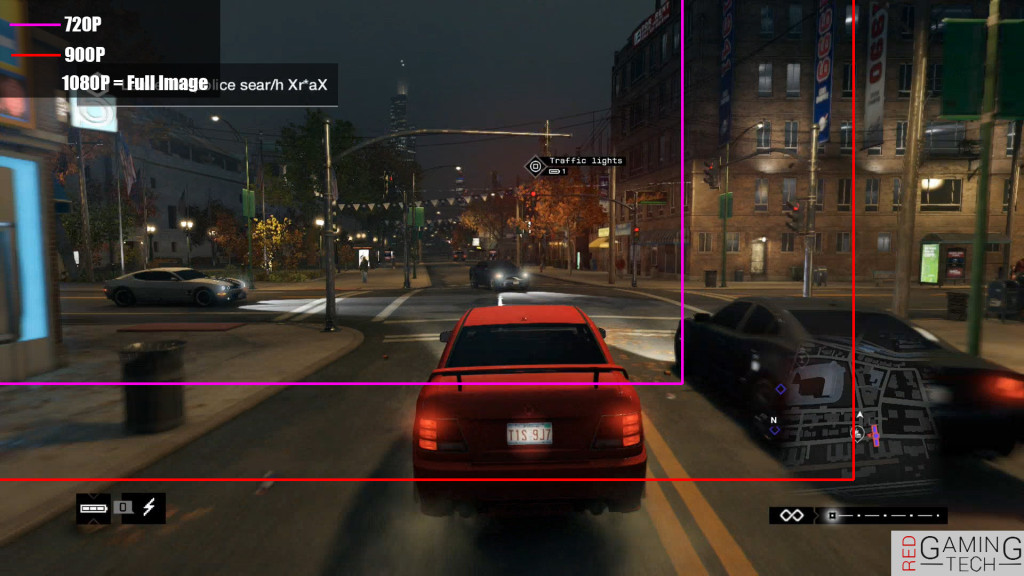

So now we’re at the point where we know that higher resolution is a good thing, the next thing we need to remember is that not all resolutions are created equal. For graphical quality, there are two resolutions: the native (rendered) resolution and the output resolution. The first is pretty simple – it’s the resolution that the system’s GPU (Graphics Processing Unit) renders (draws) the game in. So for example, in the case of Watch Dogs for the Playstation 4, we know that the game is rendered on the machine at 900P, or as we now understand it, 1600×900, meaning a total of 1,440,000 pixels. Easy, right?

The Xbox One version of Watch Dogs meanwhile renders the title at a lower resolution, 792P, or 1408 x 792 pixels. Yet if you were to look at the screen, you’ll notice that neither the X1 nor the PS4 has black bars around the image. And you might be thinking “But I’ve selected 1080P for my console”, so where are the extra 630K or so pixels (in the PS4’s case; the Xbox One is short by nearly 960K) coming from? Quite simply, the GPUs of both consoles ‘upscale’ the image, stretching it to fit the display.

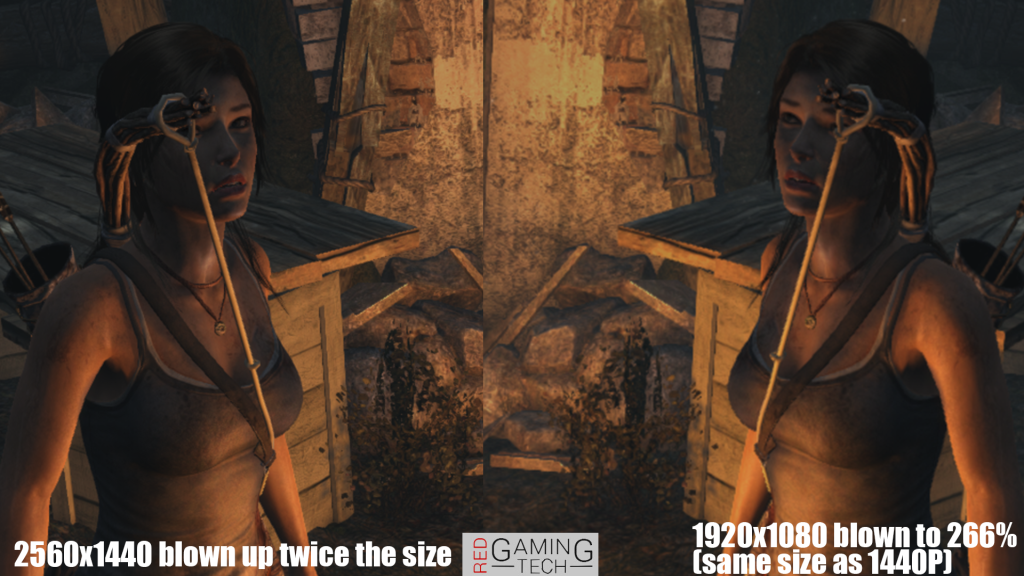

It’s important to remember that the GPUs of both the PS4 and X1 typically do a much better job of upscaling the image than a TV generally would. But you’ll still get lower quality than a ‘native’ image – in the end, all upscaling does is its best to stretch the image to your TV’s higher resolution. You can see how this works by taking a random photo with a digital camera at a resolution of, say, 720P, then bringing it into an image manipulation application and resizing it to 1080P. While GPUs generally do a great job at upscaling, they’re not increasing the resolution the game was rendered at.
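
A toy example makes the point that upscaling adds pixels but not detail. The sketch below uses nearest-neighbour scaling, which is the crudest method there is and not what the consoles actually use (their scalers apply smarter filtering); it’s here only because it shows the principle in a few lines. The ‘image’ is a small grid of numbers standing in for pixel colours.

```python
def upscale_nearest(image: list, new_width: int, new_height: int) -> list:
    """Stretch a 2D grid of pixel values using nearest-neighbour sampling."""
    old_height = len(image)
    old_width = len(image[0])
    # Every output pixel simply copies whichever source pixel it maps back onto.
    return [
        [image[y * old_height // new_height][x * old_width // new_width]
         for x in range(new_width)]
        for y in range(new_height)
    ]


small = [[1, 2],
         [3, 4]]
big = upscale_nearest(small, 3, 3)
for row in big:
    print(row)
```

The stretched grid has nine pixels instead of four, but every value in it was already present in the original. Better filters blend neighbouring pixels to hide the blockiness, yet they still can’t recover detail that was never rendered.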

Finally, remember the ‘crispness’ of an image isn’t purely down to resolution; other factors include Anti-Aliasing techniques. FXAA, for instance, often causes ‘blurring’ of edges and finer details, and while it’s very cheap to apply to a scene compared to MSAA or SSAA, image quality can suffer in comparison. It’s still generally vastly preferred over no AA at all, however.

How Frame Rate & Resolution Impact Each Other

It’s important to take into consideration that a lower frame rate, unlike a lower resolution, isn’t always the fault of the GPU. Being GPU bound is the case where the GPU is working as hard as it can but cannot keep up with the demands placed upon it. If you’re GPU bound you’re of course going to slow down the frame rate, because the GPU cannot draw a new frame fast enough – you’re asking too much from it. In these instances, lowering the resolution or other graphical effects (or performing optimization) can help alleviate the workload placed upon the video card.

The opposite of this is being CPU bound, which creates quite a different scenario and often doesn’t impact the resolution even slightly. Being CPU bound is the case where the CPU cannot issue commands to the GPU fast enough. The Graphics Processing Unit is in fact rather dumb, and the CPU dispatches “Draw Calls” to the GPU to tell it what to draw (be it a box, a blade of grass, a texture – whatever really). In these cases the GPU isn’t the limiting factor, so a higher resolution can be used: the GPU isn’t the one that’s struggling and lowering the frame rate, the CPU is the culprit.
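
The difference between the two cases can be shown with a deliberately crude model. It assumes that the CPU and GPU work in parallel so the slower of the two sets the frame time, and that GPU time scales in direct proportion to the number of pixels. Neither assumption holds exactly in a real game, and all the millisecond figures are invented for the example.

```python
def estimated_fps(cpu_ms: float, gpu_ms_at_1080p: float, height: int) -> float:
    """Rough frame rate estimate for a 16:9 image of the given height."""
    # At a fixed aspect ratio the pixel count scales with the square of the height.
    pixel_scale = (height / 1080) ** 2
    gpu_ms = gpu_ms_at_1080p * pixel_scale
    # Whichever processor takes longer is the bottleneck for the frame.
    return 1000.0 / max(cpu_ms, gpu_ms)


# GPU bound: dropping from 1080P to 720P raises the frame rate.
print(estimated_fps(cpu_ms=10, gpu_ms_at_1080p=40, height=1080))
print(estimated_fps(cpu_ms=10, gpu_ms_at_1080p=40, height=720))

# CPU bound: the same drop in resolution changes nothing.
print(estimated_fps(cpu_ms=40, gpu_ms_at_1080p=20, height=1080))
print(estimated_fps(cpu_ms=40, gpu_ms_at_1080p=20, height=720))
```

In the CPU bound case the GPU finishes early at either resolution and then sits idle waiting for its next batch of draw calls, which is why lowering the resolution buys nothing.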

While the release of both Microsoft’s Xbox One and Sony’s Playstation 4 has placed much of the focus on the GPU and memory bandwidth of both machines, the CPUs must also come under some level of scrutiny. Both machines use low power AMD Jaguar cores, running at 1.75GHZ for the Xbox One and similar speeds for the PS4 (we’re still unsure of the PS4’s exact CPU clock speed). The Jaguars are X86-64 in nature and are arranged in two modules, each module housing four cores (meaning eight CPU cores total). Two of these cores are reserved for OS functionality, meaning game developers are left with six CPU cores to use for their titles.

Poor optimization means one CPU core (let’s say core zero) will do a lot more work than the other five cores, so its utilization will be at 100 percent. The remaining cores however may only be hovering around, say, 50 percent, meaning a huge amount of CPU performance is effectively going to waste. While this issue isn’t as much of a problem on consoles as it is on PC, it does still exist and is forcing developers to do a lot of code re-writing to ensure they’re making use of all of these lower performance cores.
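
To put a number on that waste, here is the example above worked through: one core pinned at 100 percent and five at 50 percent. The figures are the illustrative ones from the paragraph, not measurements from a real game.

```python
def average_utilization(per_core_percent: list) -> float:
    """Mean utilization across all cores, in percent."""
    return sum(per_core_percent) / len(per_core_percent)


cores = [100, 50, 50, 50, 50, 50]  # core zero is the overloaded one
average = average_utilization(cores)
print(f"average utilization: {average:.1f}%")
print(f"idle capacity: {100 - average:.1f}%")
```

Even though one core is completely maxed out and holding the frame rate back, roughly two fifths of the CPU time available to the game is going unused.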

Naughty Dog have stated how they’re switching to a multi-threaded approach for the PS4, while Sucker Punch have gone so far as to say the CPU was the weak area they’ve come across so far while developing for the PS4. Game developers (particularly if Sony, AMD and Nvidia have their way) are likely to start pushing more and more functions onto GPGPU computing. At the very least, developers will be required to get the most out of the multi-core nature of both systems, and therefore to understand how best to make use of the lightweight CPU cores.

What Else Can Affect Resolution or Frame Rate?

We’ve discussed a few of the basics already: CPU and GPU performance. But since we’re trying to be as clear as possible in this article, there are a bunch of other things we need to take into account. Memory bandwidth and RAM as a whole play a crucial role. Memory bandwidth is fairly simple – it’s how much ‘data’ you’re capable of throwing around the system each second. The greater the bandwidth, the faster you can feed the CPU, GPU and other components with data. If you’re unable to push the data to, say, the GPU fast enough, it will suffer. Let’s say you’ve got a memory bandwidth of 40GB/s (which is super low, but it’s just a number to use as an example) and your GPU produces 1TFLOP of performance. Let’s also assume that the application is memory bandwidth constrained. If you were to overclock this same GPU by 25 percent, there’s a great chance you’d find little increase in performance in titles. The data just isn’t being sent fast enough, and so the GPU spends a lot of its time ‘waiting’ for new data to process.
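
One common way to reason about this is a simplified ‘roofline’ style estimate: the performance you can actually reach is capped either by the GPU’s peak rate or by how fast memory can feed it, whichever is lower. The sketch below uses the 40GB/s and 1TFLOP figures from the example; the value of 10 floating point operations per byte of data fetched is a purely hypothetical workload characteristic picked for illustration.

```python
def attainable_flops(peak_flops: float, bandwidth_bytes_per_s: float,
                     flops_per_byte: float) -> float:
    """Performance ceiling: the lower of the compute limit and the memory limit."""
    memory_limit = bandwidth_bytes_per_s * flops_per_byte
    return min(peak_flops, memory_limit)


BANDWIDTH = 40e9          # 40GB/s, as in the example above
FLOPS_PER_BYTE = 10.0     # hypothetical: work done per byte fetched from memory

stock = attainable_flops(1.0e12, BANDWIDTH, FLOPS_PER_BYTE)
overclocked = attainable_flops(1.25e12, BANDWIDTH, FLOPS_PER_BYTE)

print(f"stock:       {stock / 1e9:.0f} GFLOPS")
print(f"overclocked: {overclocked / 1e9:.0f} GFLOPS")
```

Both lines print the same figure because memory, not the GPU’s clock speed, is setting the ceiling. In this model the overclock only starts to pay off once the workload does enough math per byte for the memory limit to rise above the GPU’s peak.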

There have been several famous examples of this from the ‘old’ days of PC gaming. A notorious card for this was Nvidia’s original GeForce 256 SDR, which used only Single Data Rate (SDR) RAM. A DDR variant came out a little later on and frame rates went up noticeably (check out a really old review over at Tomshardware for an example if you’re curious).

Poor optimization is another factor – quite simply put, devs not understanding the hardware (or not having the time to optimize their code for it), or even poor SDKs leading to bad performance. There have been numerous examples of bad SDKs for consoles. Sega’s Saturn had incredibly bad SDKs released, meaning developers were often only using one of the CPUs and one of the VDPs in the machine, so its ports were far worse than the original Playstation versions. Sony then did a similar thing with the PS3, with its Cell processor, memory configuration and GPU being super difficult to use, and worse still, very poor SDKs at launch meant developers struggled a lot. This led to a lot of delays on titles for the PS3, and particularly early on in the 360 vs PS3 ‘war’ the 360 versions were usually far superior. Sony did manage to fix much of this however.

RAM amount is another big cause for concern. For example, on a PC, if your GPU hasn’t got enough video memory, you’ll notice that the frame rate will often appear high overall, but randomly tank. This is often due to insufficient space in VRAM and data constantly being swapped over the PCIe bus.

Clearly other issues include in game graphical settings, Anti-Aliasing, and a dozen other examples.

Frame Rate & Resolution And Why Developers Create Games at 30FPS

The frame rate doesn’t show up in the art work of the game, nor can you tell how smoothly a title runs while you’re looking at the back of the box and trying to figure out which game you’re going to buy. Back in 2009 Insomniac Games did some research with their fans and market regarding frame rate. They’d confirmed that generally speaking a higher frame rate didn’t significantly impact either the sales figures or the review figures of the game.

“Framerate should be as consistent as possible and should never interfere with the game. However, a drop in framerate is interestingly seen by some players as a reward for creating or forcing a complex setup in which a lot of things must happen on the screen at once. As in, ‘Damn! Did you see that? That was crazy!’” says Insomniac in a blog post on their website.

Generally speaking, developers will wish to figure out mechanics and frame rate first, then work out what resolution they can squeeze out of the machines after they’ve optimized the code. Think about it – if they were to design the game saying “1080P only… make it look as good as possible” we’d have titles running at 3FPS. Of course this isn’t always the case, but generally frame rate and gameplay have the highest priority. Developers generally announce the frame rate and resolution of a game later, after the initial screen shots and trailers have been shown off. Often, however, even after we know what the frame rate is (they’ll announce they’re shooting for, say, 30FPS) the resolution will be the thing which differs between versions. It’s pretty easy for them to adjust it by playing around with the configs of the game, and then figure out what’s the best balance between graphical sharpness, gameplay and maintaining a smooth frame rate for the gamer.

Nate Fox, The Director of the PS4’s Infamous Second Son (Created by Sucker Punch Productions) was asked in an interview why the title ran at 30 FPS, instead of the ‘hoped and expected’ 60. If you’ve been following along with the article, there’s a good chance Nate’s answer won’t come as a surprise.

“What would you expect would go away in a game? Resolution seems like the obvious thing,” was the question posed by Nate to the interviewer. “You need people on the streets, or you don’t feel like the hero, unless you’re saving people, you’re just some crazy super killer. I wouldn’t want to compromise any of the particle effects, as I think that’s what makes Delsin’s powers look alive and real… and you can’t sacrifice any of that. Cruising around town and using those abilities is the chief joy of the game, so that’s not going to be it, and we’re certainly not going to cut down on the detail in the world.”

Certainly, Sucker Punch did an extremely impressive job with Infamous Second Son – particularly when you consider that it was a launch title for the next generation. Read more of our Second Son graphical analysis here.

Dana Jan, from Ready at Dawn (developers of the Playstation 4 exclusive The Order: 1886) said in a recent interview with Kotaku, “60 fps is really responsive and really cool. I enjoy playing games in 60 fps, but one thing that really changes is the aesthetic of the game in 60 fps. We’re going for this filmic look, so one thing that we knew immediately was films run at 24 fps. We’re gonna run at 30 because 24 fps does not feel good to play. So there’s one concession in terms of making it aesthetically pleasing, because it just has to feel good to play.

“If you push that to 60, and you have it look the way we do, it actually would end up looking like something on the Discovery Channel, like an HDTV kind of segment or a sci-fi original movie maybe. Which doesn’t quite have the kind of look and texture that we want from a movie. The escapism you get from a cinematic film image is just totally different than what you get from television framing, so that was something we took into consideration.”

Personally, I completely disagree with this statement. As we’ve discussed earlier (and as Robert Hallock pointed out too), input lag isn’t your friend, and higher frame rates help to reduce it. In all of my years of gaming, I don’t think I’ve ever spoken to someone who thought a lower frame rate was better. There are however some exceptions – for example, locking a frame rate to a solid thirty rather than having it waver between 30 and, say, the mid 40s can look nicer (and remove tearing), as we’ve discussed above.

That being said, The Order 1886 isn’t finished yet, and the developers are clearly pushing for a certain look. If they feel that the best compromise between graphics, gameplay and control is 30FPS for their title then that’s their choice.

The Future of Resolution & Frame Rate

On the previous generation (the Xbox 360 and Playstation 3) titles generally ran at 720P at the start (with some exceptions running at 1080P natively). However, towards the end of the consoles’ life span many titles were running sub 720P internally, simply because the hardware didn’t evolve over time. There’s only a certain amount of optimization that you can push onto a fixed platform such as a console. While Assassin’s Creed titles generally ran at 720P on both the 360 and PS3, other titles such as Call of Duty: Black Ops 2 ran at 880×720 with post-process Anti-Aliasing applied.

With all of that said, it’s fair to say that the last generation of titles released, say The Last of Us on the PS3, did look much better than the first efforts, as developers got used to the hardware and, along with the makers of the hardware, pushed it to its limits.

“The push for 4K is probably equal parts ‘because we can,’ ‘because it looks better than 1080p,’ and ‘because 1080p is woefully underwhelming for today’s best GPUs.’ Whatever part is ultimately responsible, I’m all for it because I’m totally smitten with a future that has more 4K monitors in it. It’s hard to understand how tremendous an UltraHD display is until you sit down and play with one yourself, but my goodness the sharpness, clarity and detail is just otherworldly compared to any other display I’ve ever used,” said Robert Hallock when I asked him for his thoughts on the future of resolution and gaming on the PC.

“We’re already at the limit of what the industry offers in terms of supporting advanced display technologies. We support 4K60 SST over DisplayPort 1.2a, which is the maximum bandwidth permitted by a single DP cable. Certainly there are no higher resolutions or faster refresh rates on the horizon without some change in spec. We also have FreeSync, which basically eliminates input latency as a function of synchronizing the FPS of the GPU to the refresh rate of the monitor. That will appear in retail monitors 6-12 months from today. But as with any quest for more visuals or better performance, there are only two routes forward: faster hardware and smarter software. The Radeon division is always in pursuit of the former as a matter of business, and the latter is addressed by transformative developments like Mantle,” he concludes.

For PC owners with a decent GPU, 1080P is generally a non issue, with even a $750 machine capable of destroying most titles at 1080P / 60FPS, as we discussed in our recent buyers guide.

For consoles, we’ve got a long way to go before the PS4 or X1’s potential is ‘maxed out’. There is a lot of room for optimization, as we’ve discussed several times over, but ultimately gamers will have to accept there are limits on the hardware that the developers simply cannot get past. As we’ve pointed out in the resolution part of this article, asking the system to render at, say, 1080P versus ‘only’ 720P is asking it to push a lot more pixels, and this means either a lower frame rate or reduced graphical effects.

How much frame rate matters depends on the game. Titles which require fast paced action, such as fighting games like Street Fighter or FPS titles such as Call of Duty, need to place a premium on the frame rate to ensure that the game runs as smoothly as possible. In a fighting game, for instance, players often rely on punishing another player’s move within just a few frames of animation, so a steady and smooth experience is paramount.

We’ve barely started this generation, and it’s fairly obvious there’s a lot of potential remaining on the consoles, just as the first generation of PS3 and Xbox 360 games didn’t look as good as the final releases on those machines. We’re aware that the Xbox One will be receiving a ten percent boost available to developers. Meanwhile Sony are working on their APIs, with their ICE Team hard at work improving performance by leveraging GPGPU.

Developers creating console titles have a long way to go, and their decisions to go for sub 1080P or settle for “only” 30FPS are choices they make because they feel it best represents their vision for the game. They know they’ll be plopped in the news due to sub 1080P, which is why many developers are so reluctant to share such details. This is particularly true because when dealing with cross-platform titles, owners of the platform which is deemed to have ‘inferior’

